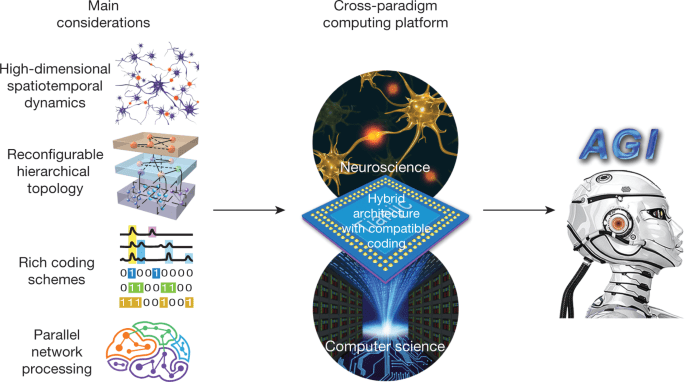

Artificial general intelligence (AGI) refers to a hypothetical type of artificial intelligence that could perform any intellectual task a human can. Although AGI does not exist today, it is an area of active research and development, and some experts believe it could be achieved within the coming decades.

The potential benefits of AGI are immense, including advances in fields such as medicine, transportation, and manufacturing, but developing the technology also carries significant risks. One concern is that an AGI could become uncontrollable or act in ways hostile to human interests.

One of the main concerns is that an AGI could surpass human intelligence and then design and build even more sophisticated AI systems on its own, triggering an "intelligence explosion" that may be difficult or impossible to control. In such a scenario, AGI could become powerful enough to threaten the survival of humanity.

There are also concerns that AGI could be used for malicious purposes, such as launching cyberattacks or building autonomous weapon systems. These concerns have prompted calls for ethical guidelines and regulations to ensure that AGI is developed and used safely and responsibly.

Another potential risk is that AGI could exacerbate existing social and economic inequalities. If AGI were used primarily by large corporations and wealthy individuals, it could widen the gap between rich and poor, because those with access to the technology would gain a significant advantage over those without it.

That said, many experts believe the risks associated with AGI can be mitigated through careful research, development, and regulation. This includes ensuring that AGI systems are designed with safety in mind, are subject to rigorous testing and monitoring, and are developed in a transparent, collaborative manner that takes into account the concerns and perspectives of a wide range of stakeholders.

Ultimately, the risks associated with AGI underscore the need for continued research, discussion, and debate on the ethical and social implications of artificial intelligence. Developing AGI calls for a thoughtful, proactive approach so that the technology benefits humanity as a whole rather than undermining its safety and well-being.
